I was reading a description of different advanced analytics methods and I felt there was something quite wrong. I had a hard time putting my finger on it. Technically the descriptions were accurate, but at a more subtle level there was an important error. In each case, the technique was described as finding something hidden; for example, cluster analysis was described as a tool to find hidden clusters. That is so close to being totally correct that it’s dangerously wrong.

Think about what that word “hidden” means in an organizational context. As an analyst, you are promising leadership that thanks to these wonderful tools you are going to find something they never suspected. You are promising that somewhere in those hills of big data lies gold, and that you will come back with a bucketload.

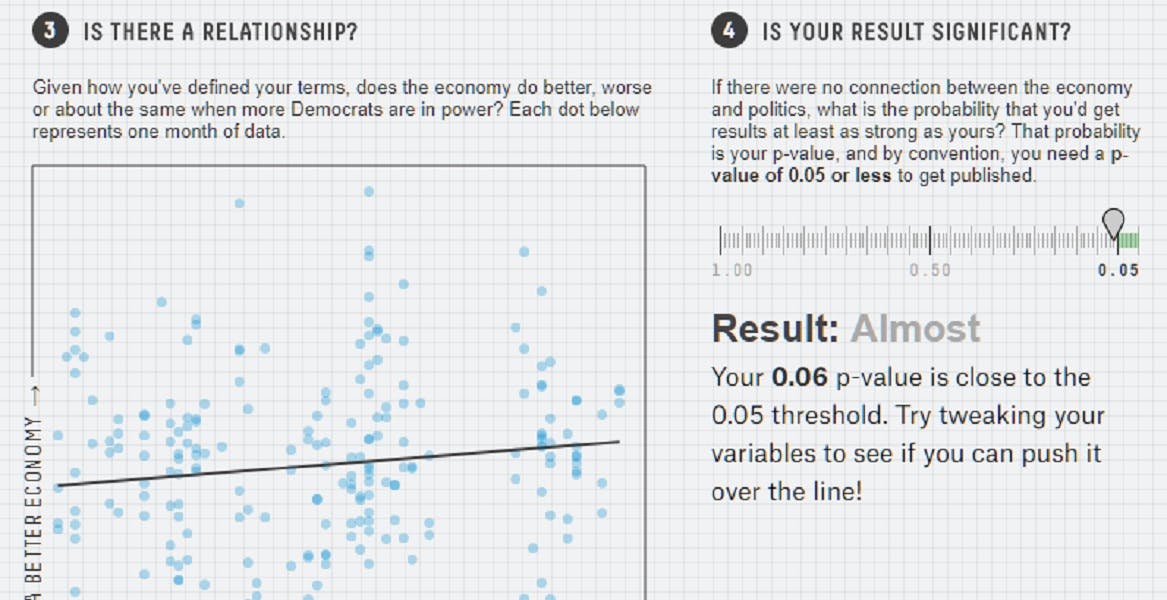

What is in those hills? Often what lies there are spurious findings, fool’s gold one might say. Look at enough data and some surprising apparent correlation is bound to show up by chance alone. In academic research, the practice of searching through data until such a result appears is called “p-hacking.” The correlation is surprising, but not real.

Want to see how that works? Try this gamified analysis to see how, by manipulating the data, you can get statistically significant but meaningless results.
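For readers who would rather see the effect in a few lines of code, here is a minimal sketch of how pure noise produces "findings." Everything in it is an assumption made for illustration: the function names, the 50 employees, the 200 metrics, and the use of a Fisher z approximation for the p-value. No real data is involved; every metric is random and has no true relationship with the outcome.

```python
import math
import random
from statistics import NormalDist


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def approx_p_value(r, n):
    """Two-sided p-value for r, using the Fisher z approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def count_spurious_hits(n_employees=50, n_metrics=200, alpha=0.05, seed=0):
    """Count pure-noise metrics that look 'significantly' correlated
    with a pure-noise outcome."""
    rng = random.Random(seed)
    # Hypothetical outcome (say, a performance score): random noise.
    outcome = [rng.gauss(0, 1) for _ in range(n_employees)]
    hits = 0
    for _ in range(n_metrics):
        # Each hypothetical metric is also random noise.
        metric = [rng.gauss(0, 1) for _ in range(n_employees)]
        r = pearson_r(metric, outcome)
        if approx_p_value(r, n_employees) < alpha:
            hits += 1
    return hits


if __name__ == "__main__":
    hits = count_spurious_hits()
    print(f"{hits} of 200 pure-noise metrics look 'significant' at p < 0.05")
```

With a 0.05 threshold, roughly one metric in twenty should clear the bar by chance, so an analyst who tests hundreds of variables and reports only the winners will always have something "hidden" to show.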

Other times what you find is, well, you’re not quite sure what it is. The findings are ambiguous. Those ambiguous findings could be better than nothing at all, or act as a hint as to where to dig deeper, but if you’ve promised a bucket of gold, then a bucket of somewhat interesting muck isn’t going to win you any points.

However, here’s what is most likely in those hills: things management already suspects. Take for example Emir Dzanic’s excellent work on finding clusters of innovative subcultures in an organization. The analysis might find a hidden cluster, but in many cases it’s just as likely to confirm what management suspected. If you’ve promised to find some shocking hidden truth, then management will be disappointed. Now imagine if you’d said, “You’ve got some ideas on where innovation lies; let’s find out for sure with a rigorous look at the data.” Then you come back with your charts saying, “You were largely right, and now we can quantify it, and here’s something that extends what you felt.” Frame it that way and management will be thrilled.

I’m mixing the emotional reaction to the findings with the practical usefulness of them because both factors show up simultaneously in real-world analytics. The rules on handling the emotional side are clear: don’t over-promise, and if you can prove people right then they are likely to be happy. The practical side may be less clear; if managers knew the answer, then why do the analysis? The reason is that it would be more accurate to say that they “suspect” a certain answer than to say they “know” it. The analysis tests whether that suspicion is correct. Furthermore, it can add quantitative insight. It is valuable to bring rigor to the process; let’s not doubt that.
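To make "testing a suspicion" concrete, here is a minimal sketch of one way to do it: a permutation test on the difference in means between two groups. This is an illustration under stated assumptions, not a recommended method for any particular study. The function name, the metric (ideas submitted per person), and every number in the two lists are made up for the example.

```python
import random


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Return (observed difference in means, one-sided p-value).

    The p-value estimates how often a random relabeling of the people
    gives group_a an advantage at least as large as the observed one.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b
        if diff >= observed:
            at_least_as_large += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (at_least_as_large + 1) / (n_permutations + 1)
    return observed, p_value


# Made-up numbers: ideas submitted per person in the team management
# suspects is more innovative, versus everyone else.
suspected_team = [7, 9, 8, 10, 9, 8, 11, 9]
other_teams = [5, 6, 7, 5, 8, 6, 7, 6, 5, 7]

if __name__ == "__main__":
    diff, p = permutation_test(suspected_team, other_teams)
    print(f"Observed difference in means: {diff:.3f}, p-value: {p:.4f}")
```

The point of the sketch is the framing: the hypothesis comes from the manager, and the analysis supplies a size for the effect and a check on whether it could plausibly be chance.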

Another side of this is respect for the wisdom of managers who are on the front line. It is easy for an MIT-educated data scientist armed with powerful statistical tools to feel they don’t need insights from the field. Yet we should work from the presumption that people on the front lines often do know very well what is going on. Our goal is to help build on their insights, perhaps occasionally surprising them by finding that something they suspected was true is not, and even occasionally turning up some hidden gold they had no inkling was there.

Task your analytics team with bringing rigor to finding things out and to testing what managers suspect. Don’t set them up to fail with the difficult goal of uncovering startling new findings.

∼∼∼∼∼

Special thanks to our community of practice for these insights. The community is a group of leading organizations that meets monthly to discuss analytics and evidence-based decision making in the real world. If you’re interested in moving down the path towards a more effective approach to people analytics, then email me at dcreelman@creelmanresearch.com.