Is artificial intelligence really HR’s friend?

Can it really speed up all those monotonous HR jobs you hate to do – the ones that stop you from doing more strategic stuff?

Better still, can it solve some of the biggest problems certain tasks still present – such as perpetuating bias?

In this series we’re going to look at different AI tools to try to answer exactly these questions.

Every few weeks, we’re going to look at and independently review a piece of HR AI.

First, our methodology:

The process works like this:

- We book a walk-through of a specific tool with an AI provider.

- We get a demonstration of its capabilities.

- We ask the sorts of questions we think HR professionals want answered – and providers have the opportunity to respond.

- The verdict below is completely our personal assessment. It’s based entirely on what we see and whether we think our questions have been satisfactorily answered. The vendor has had no input.

So who’s up next?

Today we’re looking at:

Learnosity’s AI-assisted ‘Author Aide’ authoring tool.

What’s its big USP?

According to Learnosity – which creates assessment questions and reporting analytics to help organizations test the effectiveness of their L&D – the big problem facing HR professionals is how to design consistent, high-quality questions that get to the nub of testing what someone is supposed to have learned. It argues that question creation can be wildly inconsistent and can miss the mark when it comes to testing comprehension of learning materials. So it has created a generative AI tool to write questions on HR professionals’ behalf.

Who took us through the tool:

Neil McGough, chief product officer at Learnosity.

The context:

McGough says: “Creating consistent assessment questions is a huge area of time and friction for many working in L&D. Question writing is a skill, but it’s something not everyone is experienced in, and so the quality of questions can fluctuate, meaning organizations can’t always gauge the effectiveness of their learning.”

He adds: “Often, HR professionals don’t have subject matter experts available to design questions, and so we felt there was an opportunity to solve this, speed question-writing up, improve the quality and consistency of questions, and create a better experience for candidates themselves. In all these ways, we can help organizations create better open or closed knowledge questions.”

What we saw:

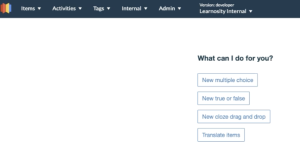

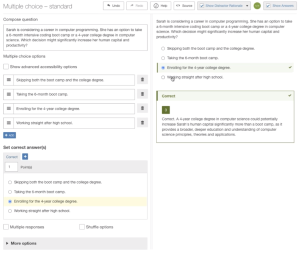

Author Aide is an authoring tool that uses ChatGPT to compose questions by looking at a company’s already-uploaded subject matter material. Users first choose the format they want – for example, a multiple-choice test or true-or-false questions.

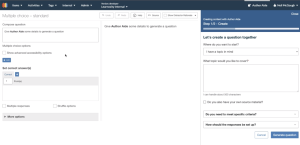

The main screen, however, is the question-authoring element, where users can ask the tool to generate a question using a few basic prompts. In our case, we asked it to create a multiple-choice question on financial literacy, based on content pasted from existing learning material held in a PDF.

To retain consistency, users can specify that the question meets a certain learning standard. When it generates a question, the tool confirms which answer is correct and also presents incorrect alternatives.

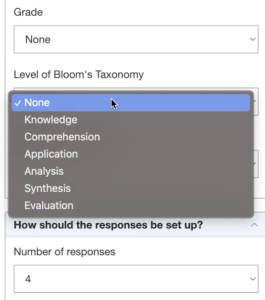

Learnosity uses Bloom’s Taxonomy – a set of three hierarchical models used to classify educational learning objectives into levels of complexity and specificity. The levels are ‘Remember’, ‘Understand’, ‘Apply’, ‘Analyze’, ‘Evaluate’ and ‘Create’. Learnosity uses its own slightly different wording – see below.

In another example, we asked the authoring tool to create a question around human capital and performance.

We also asked it to create one around control station safety.

In time, says McGough, content won’t have to be physically pasted in, but the software will be able to access it by itself.

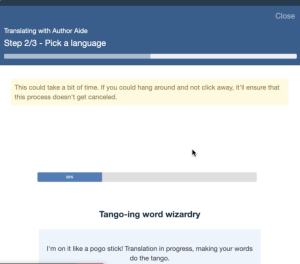

As well as seeing the questions it created, we also saw that the authoring tool can translate a question into multiple languages, allowing learning material tests to be localized. Learnosity claims it can do this translation more accurately and with better context than, say, Google Translate.

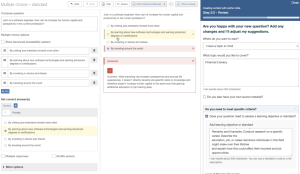

For a first-time viewer, the obvious question is whether the question the tool actually creates is akin to what the HR professional had in mind. If it isn’t, or if the user doesn’t think the result is applicable enough, McGough says you can ask it to have another go.

We asked if the user almost has to ‘learn’ how to write the ‘best’ initial prompt in order to get the best out of the question generator, and McGough says Learnosity has worked hard to “not make the question authoring hit and miss.”

He adds: “We’re not saying we’re perfect yet, and it will still need a bit more iteration, but we’re confident in the prompting that works behind the scenes. Currently, we have around 50 clients testing it for closed data questions, and are currently assessing this feedback.”

Initial reflections

There’s a lot – an awful lot – that one could be skeptical about when it comes to AI tools that claim to be able to create great content.

With this, however, what users have to accept is that it is not some clever form of mind-reading that will suddenly create the exact question you were already formulating but were having trouble putting into words. That would be, well, impossible.

What it is doing is looking at the learning materials you give it, and designing meaningful questions based on what the content is – questions that will test levels of comprehension up to a set level of complexity.

When you understand it like this, the tool does rather transform your perceptions – coming up, as it does, with very logical and very plausible questions designed to evaluate how well someone has understood the same learning material.

“There is definitely an AI-trust gap,” admits McGough, and he says this is something that will only slowly change over time. But he also argues tools like this will demonstrate what AI is capable of.

What he does admit, however, is that you can’t simply create the question, and that’s it, done. There does still need to be a human to read and review the questions created, to confirm that each question makes sense and that the correct answers are indeed so.

But the point, he says, is that this end part of the process is something that can be sent to the subject matter expert to glance over and confirm very quickly. What the tool does is save the subject matter expert from having to devote time to designing the questions from scratch.

A common fear is that anything AI generates comes across to readers as rather uninspiring, mechanistic and ‘flat’. Clever question writing should certainly be clear, but there should arguably be some room for what might otherwise be known as ‘flair’ – i.e. to give the reader a better experience.

Says McGough: “While a jovial tone would probably not be appropriate for a test scenario, there is the ability in the software for users to be able to prompt the question generator to be formal, factual or supportive. This functionality is not currently ‘switched on’ at the moment but I think it will go back in, in some form or other, eventually.”

An obvious fear is whether the AI could get itself in a twist and inadvertently word a question in such a way that it appears to be a trick question (when in reality it isn’t), causing users to think that there could be several right answers.

This, though, says McGough, is where the human review stage is still critical, but he adds: “There are still limitations in AI, but this is one area where I think the quality will quickly improve.”

Conclusions

TLNT is big enough to admit it went into this being ever so slightly skeptical.

However, having seen what the technology can do, we’re very much eating humble pie, and have become converts.

We suggest that seeing really is believing, and that anyone interested in this should make their own inquiries to test it themselves.

The good news is that, according to McGough, development of the product is still very much ongoing and will reflect user feedback.

Learnosity is already looking at how the tool can remove bias and improve DEI in the wording of questions. Preliminary testing is – claims Learnosity – successfully flagging up context for potential biases.

McGough also says he can’t see any technical reason why questions can’t be pegged to exam standards, so that internal, proprietary learning materials can convert to academic points that build up to certain recognized qualifications. This is something he says they might well be looking into in the future.

In terms of achieving its main goals – to reduce friction, speed up question writing, and improve the consistency of questions – it delivers on all levels, and to a very satisfying degree.

According to Learnosity, one further benefit of the authoring tool is that employers can retrospectively put all their existing L&D content through it.

This gives employers the chance to have Learnosity’s question generator give pre-existing learning (with low-quality associated questions) a new lease of life.