Is artificial intelligence really HR’s friend?

Can it really speed up all those monotonous HR jobs you hate to do – the ones that stop you from doing more strategic stuff?

Better still, can it solve some of the biggest problems certain tasks still present, such as perpetuating bias?

In this series we’re going to look at different AI tools to try to answer exactly these questions.

Every few weeks, we’re going to look at and independently review a piece of HR AI.

First, our methodology:

The process works like this:

- We book a walk-through of a specific tool with an AI provider.

- We get a demonstration of its capabilities.

- We ask the sorts of questions we think HR professionals want answered – and providers have the opportunity to respond.

- The verdict below is completely our personal assessment. It’s based entirely on what we see and whether we think our questions have been satisfactorily answered. The vendor has had no input.

So who’s up next?

Today we’re looking at:

Oyster’s ‘Pearl’ AI

What’s its big USP?

According to Oyster – which helps organizations hire compliantly across 180 different countries – the global employment landscape continues to be something of a minefield, and one where there are very real (legal) consequences for getting pay, tax and benefits wrong. A further problem with international recruitment is that a company may have a very specific question, yet trawling through pages and pages of online material may still not provide the answer. Pearl, Oyster’s AI tool, aims to solve both these issues by letting HR professionals simply ask a question as they have it in their mind, and quickly presenting the information they actually need. The tool promises to understand the context of the question, then retrieve and write an answer that responds to it directly.

Who took us through the tool?

Michael McCormick, SVP of product and engineering

The context:

“There’s lots of international recruiting guides, and pages of data, but how often does a piece of existing content convert a very specific question an HR officer might have into a clear answer?” says McCormick. “We thought a better approach would be to use a conversational interface – ie like a chatbot.”

What we saw:

We were introduced to the Pearl AI ‘beta’ interface – one that anyone can access on Oyster’s current website.

This chatbot looks exactly as you would expect any typical chatbot to look – with a pop-up box and a prompt that invites you to ask any question you like.

At this point, McCormick explained that to answer a question someone might pose, the AI tool mines all the content that exists in Oyster’s own country hiring guides and remote work regulation document – content that users would otherwise have to read in detail and which still might not tell them precisely what they want to know.

The longer-term aim, however, is for the AI tool to incorporate information from an employer’s proprietary global employment data as well as insights from The Reef – an open-source employee guide with best practices for building distributed, asynchronous teams.

It’s also worth noting one important proviso: “It’s not designed to give a 100% correct answer, but it’s there to create a dialogue around the sorts of information HR professionals will need to make their hiring decisions,” says McCormick.

When pressed about whether this diminishes the power of the tool, McCormick added: “In all likelihood, the answer will actually be correct, because it is responding to how an ordinary person might ask a question and pulling off our own content to answer it. But we maintain that it will still need checking. The main job of the tool is that the AI is digesting what has been asked of it, to quickly present the sorts of initial data that HR folk will need to start their decision making.”

To ensure it responds with an appropriate answer, Oyster has worked with CommandBar, which provides AI assistance technology. “Its technology trains the conversational AI to our content,” says McCormick. “The entire process has probably taken around 3-5 months from vision to first use,” he adds. “What it’s really dealing with is working out how many different ways someone might ask the same question. There is an almost infinite way people can request a particular piece of information, so the AI looks for context, then maps reference data to semantics and words.”
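For readers curious what mapping many phrasings onto the same piece of content can look like, here is a minimal sketch in Python. It is our own illustration, not Oyster’s or CommandBar’s implementation, and production tools most likely rely on learned language models rather than word lists. The synonym table, the stopword list, the two placeholder passages and the function names are all assumptions made up for this example.

```python
import re

# Hypothetical synonym table: folds colloquial words into one canonical term.
SYNONYMS = {"vacation": "leave", "holiday": "leave", "kick": "start", "begin": "start"}

# Hypothetical list of filler words that carry little meaning on their own.
STOPWORDS = {"the", "a", "in", "of", "to", "do", "does", "when", "how",
             "is", "it", "me", "about", "tell"}

# Placeholder passages standing in for guide content (not real guide text).
PASSAGES = {
    "uk-sick-pay": "Sick pay in the UK does not start until the fourth day of absence.",
    "france-leave": "Workers in France are entitled to paid annual leave days.",
}


def normalize(text):
    """Lowercase, drop filler words and replace synonyms with canonical terms."""
    words = re.findall(r"[a-z]+", text.lower())
    return {SYNONYMS.get(w, w) for w in words if w not in STOPWORDS}


def best_match(question):
    """Return the key of the passage sharing the most terms, or None if none do."""
    q = normalize(question)
    best_key, best_score = None, 0.0
    for key, passage in PASSAGES.items():
        p = normalize(passage)
        union = q | p
        # Jaccard similarity: shared terms divided by all distinct terms.
        score = len(q & p) / len(union) if union else 0.0
        if score > best_score:
            best_key, best_score = key, score
    return best_key


if __name__ == "__main__":
    for question in ("When does sick leave in the UK kick in?",
                     "Tell me about vacation days in France?",
                     "What is the notice period in Brazil?"):
        print(question, "->", best_match(question))
```

In this sketch, ‘start’ and ‘kick in’ collapse to the same term, so differently worded questions land on the same passage. A question with no overlapping terms returns nothing, which is the point at which a real tool would hand over to a person.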

So how did it fare?

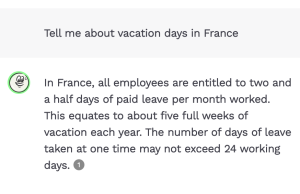

Initially we asked it the following question: “Tell me about vacation days in France?”

Note how we didn’t actually ask it a specific question – like ‘how many days’ holiday do people get in France?’ That would have been more precise, but we wanted to see how it handled the sort of broad holiday question someone might casually ask.

Surprisingly, it actually gave us a very thorough answer – see below.

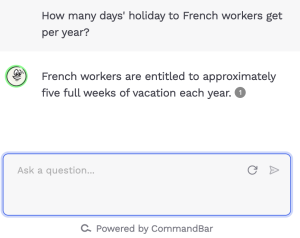

When we asked a more specific question, it gave a more specific answer. We rephrased our question to: “How many days’ holiday do French workers get per year?” and the answer was much more concise.

Arguably, it isn’t as comprehensive as the first answer, but that’s because our question was no longer a general one. The AI seemed to know this. The more conversational-style first question created a more conversational and broad-brush answer.

But what about more complex questions?

It’s human nature to ask a main and then a supplementary question – we don’t even recognize that we are doing this. Could the AI handle this?

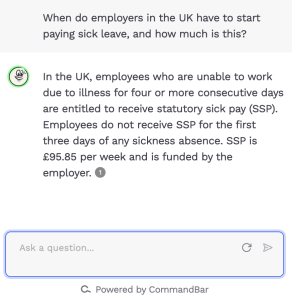

To give an example of this, we typed in the following question: “When do employers in the UK start pay sick leave, and how much is it?”

This is – in theory – a much more complex question; it has two parts, and it might have thrown the AI off.

But to our surprise, it handled it more than adequately, explaining that: “employees who are unable to work due to illness for four or more consecutive days are entitled to receive statutory sick pay (SSP). Employees do not receive SSP for the first three days of any sickness absence. SSP is £95.85 per week and is funded by the employer.”

That couldn’t have been more concise if it tried. It perfectly answered the stated question (which it interpreted with apparent ease).

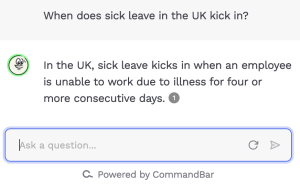

TLNT decided to try and challenge it even more. We asked: “When does sick leave in the UK kick in?”

Notice how we deliberately didn’t say ‘start’, but tried the more colloquial ‘kick in’, which is how someone might naturally phrase it.

To our surprise again (though perhaps we shouldn’t be surprised by now), it passed with flying colors. It even answered our question by using the term ‘kicks in’ – presumably to continue the same conversational tone. Very impressive.

Conclusions:

When AI actually ‘works,’ it really does have the capacity to blow your socks off.

Perhaps TLNT is late to the party on what AI can do, and this may not impress those who are more used to conversational AI.

But the successful marriage of this with complex global employment law does feel like a breakthrough. Because answers are given to questions as they would naturally be asked, it really does feel as though anyone can get the sort of information they need without first having to think about how to craft the question. They just need to type what first comes to mind.

It’s worth noting that we did ask it some questions where an answer couldn’t be given. (Here, the option was given to talk to one of Oyster’s people directly.) However, explained McCormick, this was because the content the AI was mining did not hold this information, rather than the AI being unable to find it. Had the information been there to mine, it would have retrieved it and crafted an appropriate response to the question.

So yes, this does mean the tool’s performance is tied to the amount of information the AI is being asked to trawl.

But the beauty of the AI is that as Oyster updates its own resources, there’s no need to create the new information ‘trees’ and relationship links that would have been required in the past. The AI will automatically find the new information if a question pertaining to it is asked.

According to McCormick, clients currently testing it are also blown away by how it handles natural language requests. He reports that popular questions tend to be around understanding variations in time off/leave, notice periods and pensions/salary contributions between different countries.

“Clients using it are able to get the reassurance they need that if there are any weird rules they don’t know about, they are highlighted, and more research can be done,” he says.

One other interesting observation, adds McCormick, is that the AI helps grow people’s confidence. “Clients aren’t embarrassed about asking the AI a question. It’s non-judgemental, and doesn’t make people feel foolish for asking a question,” he observes. “I think people can still often feel ashamed about revealing they may not know something if they have to ask a human!”